Hazelcast Enterprise Edition Feature

This guide explains how to migrate data in maps or JCache data structures from a running IMDG 3.12.x cluster to a Hazelcast 5.x cluster.

Requesting the Migration Tool

Migrating data between clusters relies on a specialized variant of Hazelcast’s WAN replication feature called the migration tool. Before you can start migrating data, you must request this tool from the Hazelcast team.

If you’re using an Enterprise cluster (IMDG Enterprise or IMDG Enterprise HD), contact [email protected] to request the migration tool. If you’re not using an Enterprise cluster, contact [email protected].

The Hazelcast team will prepare and send you the migration tool as a special distribution library, i.e., a JAR file.

Before you Migrate

The migration tool does not migrate clients. You must adapt your code to use 5.x clients, run both 3.12.x and 5.x clients and clusters side by side, and gradually transfer traffic from 3.12.x clients to 5.x clients. See the Client Migration section on this page.

The migration tool does not provide any way to avoid recompiling code between Hazelcast 3.12.x and 5.x, be it the code using members or client instances. It only migrates the member data and does not provide any 3.12.x compatible API on a 5.x member or client.

The migration tool provides a migration path from a Hazelcast 3.12.x cluster to a 4.x or 5.x cluster, not from any previous 3.x versions. See the Limitations and Known Issues section on this page.

Terminology

The migration tool introduces a new type of Hazelcast member instance. We will distinguish between two types of members: migration and plain members.

The migration members are the instances which are available as non-public releases and are able to accept connections and packets from members of a different major version. They use more memory, have higher GC pressure and CPU usage in certain scenarios, all to be able to process messages from two different major versions.

The migration members have a compatibility serialization service that knows how to deal with classes whose serialization changed across 3.12.x and 5.x versions, e.g. Strings.

Plain members are the regular public releases with a minimal amount of compatibility code.

The plain members are not able to accept connections and process messages from members of another major version. However, you might combine plain and migration members to form a single cluster.

In other words, migration members are simply new non-public Hazelcast releases which are compatible with plain 3.12.x or 5.x members.

Using the Migration Tool

You can start a migration member from the 5.6.0-migration.jar as you would a plain Hazelcast member. See the Install Hazelcast and Start a Cluster sections.

Example Migration Scenarios

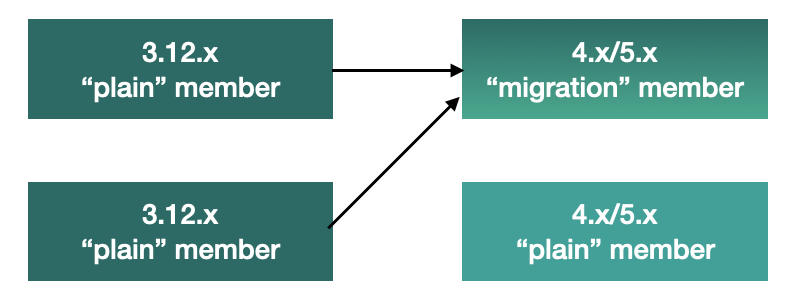

Migrating from 3.12 to 5.x (ACTIVE/PASSIVE):

The diagram above outlines how you can perform the migration from 3.12.x to a 5.x cluster. Below we describe the diagram and process in more detail. If you are using an additional 3.12.x cluster for Disaster Recovery (DR), read the WAN Event Forwarding section after reading this section.

- The first step is to set up the 3.12.x cluster. You can also use your existing 3.12.x clusters. The members of this cluster must be at version 3.12 (any patch version). You need to set up WAN replication between the 3.12.x and 5.x clusters as described in the third step below.

- Next, set up a 4.x or 5.x cluster. It must have at least one migration member, and all other (plain) members should be 5.x. The migration member can be a "lite" member even if the plain members are not; making it a lite member also simplifies the later migration steps. The exact ratio of migration to plain members depends on your environment. In some cases you cannot mix members of different "types" (or versions), and you can then create a cluster composed entirely of migration members. Otherwise, the ratio depends on parameters such as the map and cache mutation rate on the source cluster, the WAN tuning parameters, the resources given to the 5.x cluster members, the load on the 5.x cluster that is unrelated to the migration process, and whether the migration members are lite members. As a rule of thumb, start with as many migration members as the number of members usually involved in WAN replication (if you were already using WAN replication between clusters), or with an equal number of migration and plain members. Then decrease the number of migration members if testing the migration scenario shows that fewer members can handle the migration load.

- Add WAN replication between the two clusters. As always, you can do this through static configuration or add it dynamically. The key point is that the 3.12.x cluster must replicate only to the migration members of the 5.x cluster. Plain members cannot process messages from 3.12.x members, so WAN replication must not target them. Otherwise, the WAN replication configuration on the 3.12.x cluster is the same as for replication towards another 3.12.x cluster, and no special configuration is needed. See the WAN Replication section for details.

- After the clusters have been started and WAN replication has been added, you can use WAN sync for IMap, or wait until enough entries have been replicated in the case of IMap or ICache.

- After enough data has been replicated to the 5.x cluster, you can shut down the 3.12.x cluster. You can check whether your map data is in sync by using merkle tree sync, with the caveat that in some edge cases the merkle tree consistency check may report the clusters as out of sync when they are in fact in sync. In other cases, once WAN sync completes successfully and there are no errors, the map data should be synchronized. See the Limitations and Known Issues section for more information on using merkle trees for consistency checks.

- Finally, you can shut down the 5.x migration members, or do a rolling restart of these members to plain members. If your 5.x cluster was composed entirely of migration members, you must do a rolling restart of the entire cluster. At this point, the 5.x cluster has only plain members. You can continue using this cluster or continue on to a rolling upgrade to 5.x, for instance.
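The WAN replication step above requires the source cluster to target only the migration members of the other cluster. The following is a minimal sketch of how you might derive that endpoint list, assuming you keep your own inventory of target members. The `TargetMember` class and the `migrationEndpoints` helper are hypothetical names for illustration and are not part of the Hazelcast API; the addresses are made up.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical inventory helper; none of these names are Hazelcast API.
final class MigrationEndpoints {

    /** One member of the target cluster, as tracked in your own inventory. */
    static final class TargetMember {
        final String address;     // host:port of the member
        final boolean migration;  // true if the member was started from the migration JAR

        TargetMember(String address, boolean migration) {
            this.address = address;
            this.migration = migration;
        }
    }

    /** Returns a comma-separated endpoint list that contains migration members only. */
    static String migrationEndpoints(List<TargetMember> members) {
        String endpoints = members.stream()
                .filter(m -> m.migration)   // plain members cannot process cross-version messages
                .map(m -> m.address)
                .collect(Collectors.joining(","));
        if (endpoints.isEmpty()) {
            throw new IllegalStateException("target cluster needs at least one migration member");
        }
        return endpoints;
    }

    public static void main(String[] args) {
        List<TargetMember> target = Arrays.asList(
                new TargetMember("10.0.0.1:5701", true),
                new TargetMember("10.0.0.2:5701", false),
                new TargetMember("10.0.0.3:5701", true));
        // prints "10.0.0.1:5701,10.0.0.3:5701"
        System.out.println(migrationEndpoints(target));
    }
}
```

The resulting string is the kind of value you would place in the target endpoint list of your WAN publisher configuration on the source cluster.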

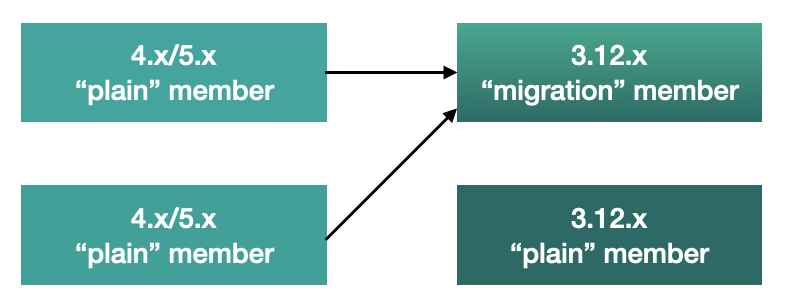

Migrating from 5.x to 3.12.x (ACTIVE/PASSIVE):

The diagram above outlines how you can perform the migration from 5.x to a 3.12.x cluster. If you are using an additional 5.x cluster for Disaster Recovery (DR), read the WAN Event Forwarding section after reading this section. The process is analogous to the 3.12.x → 5.x migration, but we describe the diagram and process in more detail below:

- The first step is to set up the 5.x cluster. You can also use an existing 5.x cluster. You need to set up WAN replication between the 5.x cluster and the 3.12.x cluster, as described in the third step below.

- Next, set up a 3.12.x cluster. It must have at least one migration member, and all other (plain) members should have a version of 3.12 (any patch level). The migration member can be a "lite" member even if the plain members are not; making it a lite member also simplifies the later migration steps. The exact ratio of migration to plain members depends on your environment. In some cases you cannot mix members of different "types" (or versions), and you can then create a cluster composed entirely of migration members. Otherwise, the ratio depends on parameters such as the map and cache mutation rate on the source cluster, the WAN tuning parameters, the resources given to the 3.12.x cluster members, the load on the 3.12.x cluster that is unrelated to the migration process, and whether the migration members are lite members. As a rule of thumb, start with as many migration members as the number of members usually involved in WAN replication (if you were already using WAN replication between clusters), or with an equal number of migration and plain members. Then decrease the number of migration members if testing the migration scenario shows that fewer members can handle the migration load.

- Set up WAN replication between the two clusters. As always, you can do this through static configuration or add it dynamically. The key point is that the 5.x cluster must replicate only to the migration members of the 3.12.x cluster. Plain members cannot process messages from 5.x members, so WAN replication must not target them. Otherwise, the WAN replication configuration on the 5.x cluster is the same as for replication towards another 5.x cluster, and no special configuration is needed.

- After the clusters have been started and WAN replication has been added, you can use WAN sync for IMap, or wait until enough entries have been replicated in the case of IMap or ICache. You can check whether your map data is in sync by using merkle tree sync, with the caveat that in some edge cases the merkle tree consistency check may report the clusters as out of sync when they are in fact in sync. In other cases, once WAN sync completes successfully and there are no errors, the map data should be synchronized. See the Limitations and Known Issues section for more information on using merkle trees for consistency checks.

- After enough data has been replicated to the 3.12.x cluster, you can shut down the 5.x cluster.

- Finally, you can shut down the 3.12.x migration members or do a rolling restart of these members to plain members. If your 3.12.x cluster was composed entirely of migration members, you must do a rolling restart of the entire cluster. At this point, the 3.12.x cluster has only plain members.

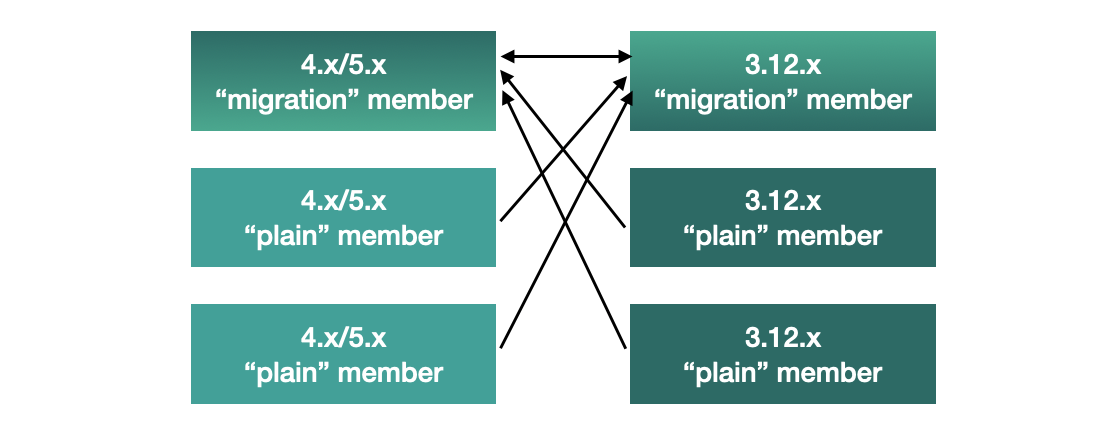

Bidirectional Migration between 3.12.x and 5.x (ACTIVE/ACTIVE):

The diagram above outlines how you can perform a bidirectional migration between 3.12.x and 5.x. In case you are using additional 3.12.x or 5.x clusters for Disaster Recovery (DR), please read the WAN Event Forwarding section for more information after reading this chapter. The process is simply a combination of the first two scenarios:

- The first step is to set up the 3.12.x and 5.x clusters. You can also use existing clusters. Each of these clusters must have at least one migration member. The migration member can be a "lite" member even if the plain members are not; making it a lite member also simplifies the later migration steps. Other plain members of the 3.12/4.x cluster can be of any patch version. The exact ratio of migration to plain members depends on your environment. In some cases you cannot mix members of different "types" (or versions), and you can then create a cluster composed entirely of migration members. Otherwise, the ratio depends on parameters such as the map and cache mutation rate on the source cluster, the WAN tuning parameters, the resources given to the cluster members, the load on the clusters that is unrelated to the migration process, and whether the migration members are lite members. As a rule of thumb, start with as many migration members as the number of members usually involved in WAN replication (if you were already using WAN replication between clusters), or with an equal number of migration and plain members. Then decrease the number of migration members if testing the migration scenario shows that fewer members can handle the migration load.

- Set up WAN replication between the two clusters. As always, you can do this through static configuration or add it dynamically. The key point is that both clusters must replicate only to the migration members and not to the plain ones, because plain members cannot process messages from members of another major version. Otherwise, the WAN replication configuration is the same as for regular WAN replication towards clusters of the same major version, and no special configuration is needed.

- After the clusters have been started and WAN replication has been added, you can use WAN sync for IMap, or wait until enough entries have been replicated in the case of IMap or ICache. You can check whether your map data is in sync by using merkle tree sync, with the caveat that in some edge cases the merkle tree consistency check may report the clusters as out of sync when they are in fact in sync. In other cases, once WAN sync completes successfully and there are no errors, the map data should be synchronized. See the Limitations and Known Issues section for more information on using merkle trees for consistency checks.

- After enough data has been replicated, you can shut down either of the clusters and afterwards shut down the remaining migration members or do a rolling restart of these members to plain members. If any cluster that you are keeping is composed entirely of migration members, you need to do a rolling restart of the entire cluster.

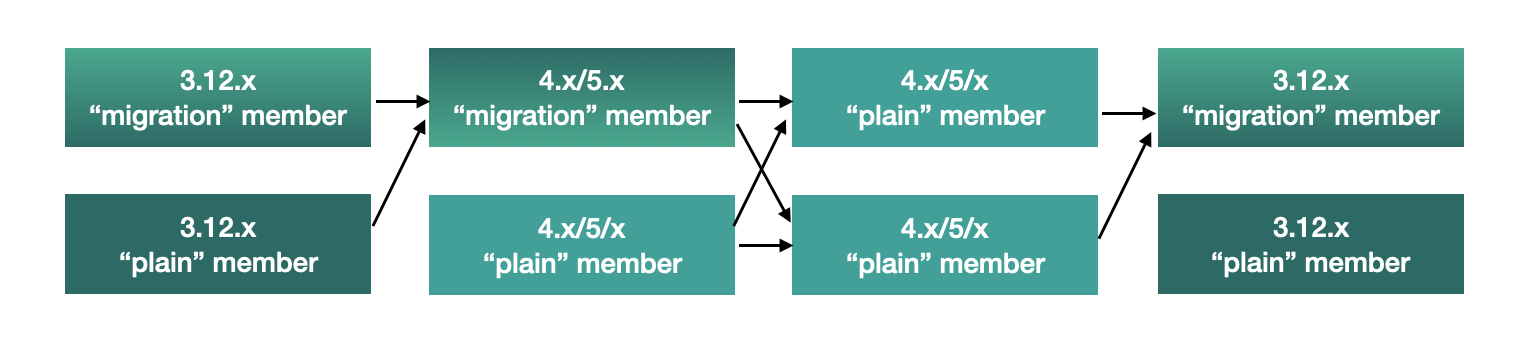

WAN Event Forwarding:

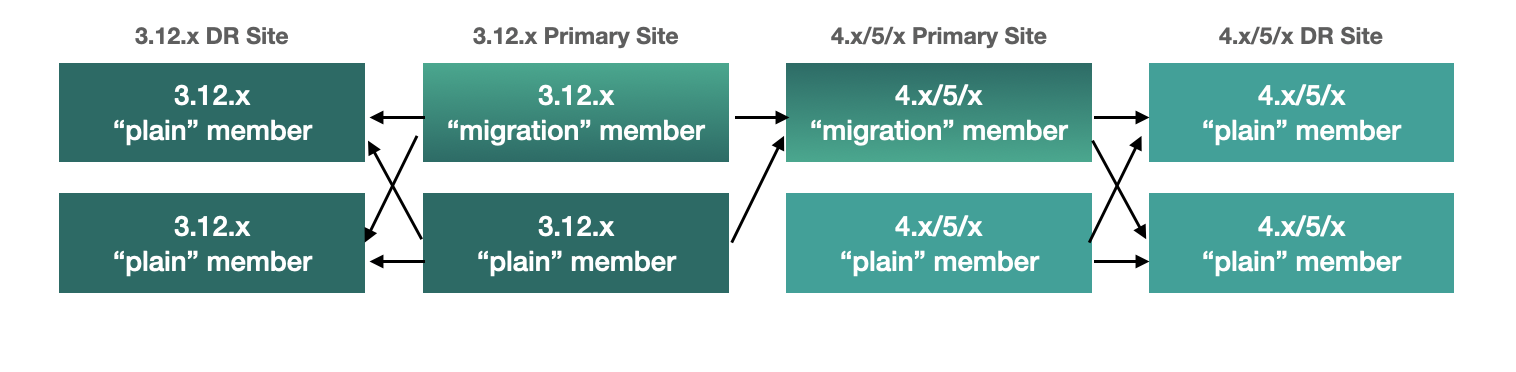

Finally, we show how clusters of different major versions can be linked so that you can form complex topologies with WAN replication. The key restrictions that you need to keep in mind when combining are as follows:

- If you are connecting members of different major versions, the recipient/target of the connection must be a migration member and not a plain member.

- If a cluster contains a migration member, it may also contain plain members, with the added restriction that 4.x plain members should be at least 4.0.2 and at most 4.2 (any patch version). The 3.12.x plain members can be of any patch version. Once migration has finished and the migration members have been shut down, this restriction is lifted.

- If the cluster is a source/active/sender cluster replicating towards a cluster of another major version, the source cluster must be of minor version 3.12 or 5.x. The patch level is irrelevant, unless the source cluster is also a target cluster for another WAN replication, in which case it must adhere to the first two rules.
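The first two restrictions above can be expressed as simple checks. The sketch below is only an illustration under the assumptions stated in the list: `WanTopologyRules`, `connectionAllowed` and `plainMemberAllowed` are hypothetical names, not Hazelcast API, and the version check covers only the 3.12.x and 4.x cases that the list spells out.

```java
// Hypothetical checker for the restrictions listed above; not part of Hazelcast.
final class WanTopologyRules {

    /** First rule: a connection across major versions must target a migration member. */
    static boolean connectionAllowed(int sourceMajor, int targetMajor, boolean targetIsMigrationMember) {
        return sourceMajor == targetMajor || targetIsMigrationMember;
    }

    /**
     * Second rule: plain members that share a cluster with a migration member.
     * Expects a numeric version with at least major and minor parts, e.g. "3.12.7" or "4.2".
     */
    static boolean plainMemberAllowed(String version) {
        String[] parts = version.split("\\.");
        int major = Integer.parseInt(parts[0]);
        int minor = Integer.parseInt(parts[1]);
        int patch = parts.length > 2 ? Integer.parseInt(parts[2]) : 0;
        if (major == 3) {
            return minor == 12;        // any 3.12.x patch version
        }
        if (major == 4) {
            if (minor == 0) {
                return patch >= 2;     // at least 4.0.2
            }
            return minor <= 2;         // at most 4.2, any patch version
        }
        // The list above does not state a range for other major versions.
        throw new IllegalArgumentException("rule does not cover version " + version);
    }

    public static void main(String[] args) {
        System.out.println(connectionAllowed(3, 5, false)); // false: target is a plain member
        System.out.println(connectionAllowed(3, 5, true));  // true: target is a migration member
        System.out.println(plainMemberAllowed("4.0.1"));    // false: older than 4.0.2
        System.out.println(plainMemberAllowed("4.2.5"));    // true
    }
}
```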

If you were using an additional cluster for disaster recovery, you need to set up WAN event forwarding from the migration target cluster to a new DR cluster. Only after the migration process has finished may you shut down the source cluster and its DR cluster. See the following image for an example setup when migrating from 3.12.x to 5.x with additional DR clusters.

In the example above, once the migration is complete, you may shut down the 3.12.x and 5.x DR and primary sites.

Limitations and Known Issues

The solution is limited to IMap and ICache

Since we’re relying on WAN replication for migration, the data migration is restricted to migrating IMap and ICache data. In addition to this, IMap WAN replication supports WAN sync while ICache doesn’t.

The migration member needs to be able to deserialize and serialize all the received keys and values:

Since the serialized format of some classes changed between major versions, we need to deserialize and re-serialize every key and value received from a member from another major version. Otherwise, we might end up with two entries in an IMap for the exact same key or we might not remove an entry even though it was deleted on the source/active cluster. This is the task of the migration member and it means that this member needs to have the class definition for all keys and values received from the clusters of another major version. On the other hand, for entries received from a cluster of the same major version, we don’t need to go through this process as we are sure that the serialized format hasn’t changed. This saves us from spending processing time and creating more litter for the GC to clean up.
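To see why skipping re-serialization can produce two entries for the same key, consider a store that is keyed by serialized bytes. The sketch below is illustrative only: the two encodings (UTF-16BE and UTF-8) are stand-ins chosen for the example and are not Hazelcast's actual wire formats, and `ReserializeDemo` and its methods are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Illustration only; the "old" and "new" formats are made up for this example.
final class ReserializeDemo {

    static ByteBuffer oldFormat(String s) {
        return ByteBuffer.wrap(s.getBytes(StandardCharsets.UTF_16BE));
    }

    static ByteBuffer newFormat(String s) {
        return ByteBuffer.wrap(s.getBytes(StandardCharsets.UTF_8));
    }

    /** Decodes with the sender's format and re-encodes with the local format. */
    static ByteBuffer reserialize(ByteBuffer oldBytes) {
        String decoded = StandardCharsets.UTF_16BE.decode(oldBytes.duplicate()).toString();
        return newFormat(decoded);
    }

    /** Stores a local write and a replicated write for the same key, keyed by raw bytes. */
    static int entriesWithoutReserialization(String key) {
        Map<ByteBuffer, String> store = new HashMap<>();
        store.put(newFormat(key), "local write");
        store.put(oldFormat(key), "replicated write"); // different bytes, so a second entry
        return store.size();
    }

    /** Same as above, but the replicated key is converted to the local format first. */
    static int entriesWithReserialization(String key) {
        Map<ByteBuffer, String> store = new HashMap<>();
        store.put(newFormat(key), "local write");
        store.put(reserialize(oldFormat(key)), "replicated write"); // same bytes, entry is overwritten
        return store.size();
    }

    public static void main(String[] args) {
        System.out.println(entriesWithoutReserialization("user-1")); // 2
        System.out.println(entriesWithReserialization("user-1"));    // 1
    }
}
```

The same reasoning applies to removals: a delete that arrives with the key in a different byte layout would not match the stored entry.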

Issues when using merkle trees and keys and values of specific classes:

The serialized format of some classes changed between 3.12.x and 5.x, so merkle trees may report differences between two IMaps when in fact there are none. For WAN sync using merkle trees, this means the source cluster might transmit more entries than necessary to bring the two IMaps in sync. This is not a correctness issue, and the IMaps should end up with the same contents. On the other hand, a "consistency check" might always report that the two IMaps are out of sync even though their contents are identical.
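The effect can be reproduced with any digest that is computed over serialized bytes. The following sketch folds the bytes of every entry into a single hash that stands in for a merkle tree root. It assumes two made-up encodings (UTF-8 and UTF-16BE) rather than Hazelcast's real serialization formats, and `DigestMismatchDemo` is a hypothetical name.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Collections;
import java.util.Map;
import java.util.TreeMap;

// Illustration only; a flat hash stands in for a merkle tree root.
final class DigestMismatchDemo {

    /** Folds the serialized bytes of every entry, in key order, into one digest. */
    static int digest(Map<String, String> map, Charset wireFormat) {
        int root = 1;
        for (Map.Entry<String, String> e : new TreeMap<>(map).entrySet()) {
            root = 31 * root + Arrays.hashCode(e.getKey().getBytes(wireFormat));
            root = 31 * root + Arrays.hashCode(e.getValue().getBytes(wireFormat));
        }
        return root;
    }

    public static void main(String[] args) {
        Map<String, String> source = Collections.singletonMap("a", "b");
        Map<String, String> target = Collections.singletonMap("a", "b");

        // The logical contents are identical ...
        System.out.println("logically equal: " + source.equals(target));
        // ... but digests over differently serialized bytes do not match.
        System.out.println("digests equal: "
                + (digest(source, StandardCharsets.UTF_16BE) == digest(target, StandardCharsets.UTF_8)));
    }
}
```

A check based on such digests reports a difference even though a field-by-field comparison of the two maps would find none.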

Client Migration

Starting with Hazelcast IMDG 4.0, in addition to all the serialization changes done on the member side, there have been many changes in how the client connects to and interacts with the cluster. On top of this, Hazelcast 5.x introduced new features not available in 3.12.x and removed some features that were present in 3.12.x. Because of these changes, it is not possible to maintain the "illusion" of connecting to a 5.x cluster with a 3.12.x client.

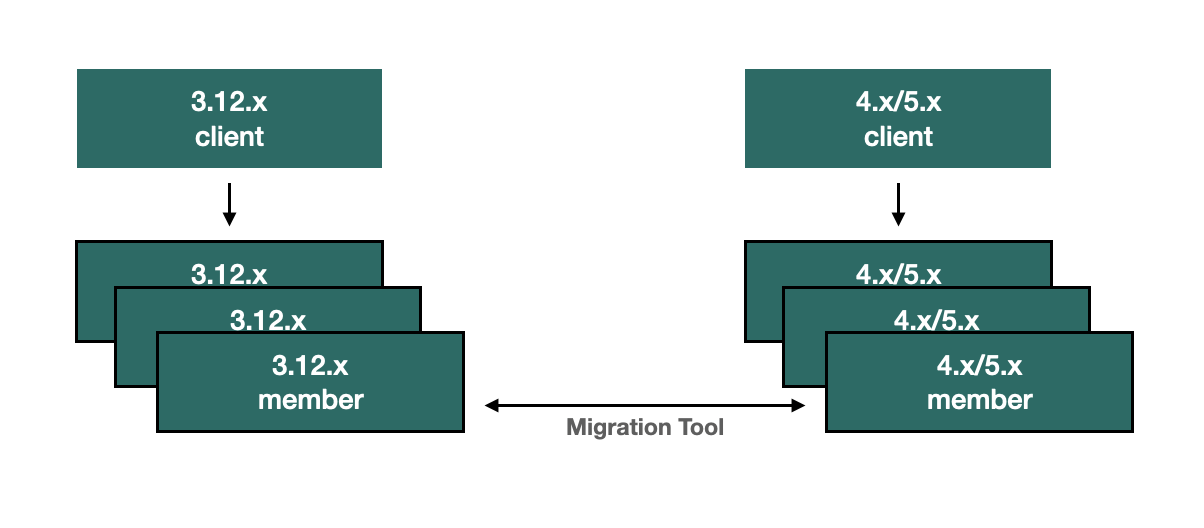

The general suggestion on approaching the migration of clients between 3.12.x and 5.x clusters is shown in the image below.

As shown, the 3.12.x clients should stay connected to the 3.12 cluster and the 5.x clients should stay connected to the 5.x cluster. The migration tool ensures that the data between 3.12.x and 5.x members is in-sync. You can then gradually transfer applications from the 3.12.x clients to applications using 4.x or 5.x clients. After all applications are using the 5.x clients and reading/writing data from/to the 5.x members, the 3.12.x cluster and the 3.12.x clients can be shut down.

The same suggestion applies when migrating back from 5.x to 3.12.x, only with the versions reversed.

Using Rolling Upgrades

For migrating between IMDG 4.x and Platform 5.0 releases, you can also use the Rolling Upgrade feature, in addition to the migration tool described above. See the Rolling Upgrades section on how to perform it.